Apparently, Windows Vista cost Microsoft about $6 billion to develop. Check out the full article here.

Friday, May 21, 2010

Sunday, March 07, 2010

Another Long Wait

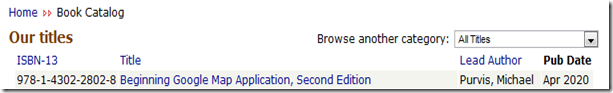

A while ago, I blogged about an Apress book on Apple Mac OS X that was due to be released in Aug 2020. Looks like there is another book on the way which we will have to wait with baited breath for:

Wow, only another 10 years to go before this badboy is unleashed on the public. Can’t wait. :)

Saturday, February 27, 2010

Good Blog Series On Using MSpec

Over on the Elegant Code blog there are some nice articles on MSpec which may be of interest to some of you. For those of you not familiar with MSpec, it’s a great open source BDD (Behaviour Driven Development) framework for .NET. Check it out.

Sunday, February 21, 2010

Time To Embrace The Cloud

You can’t help but notice the big increase in coverage for cloud computing both in IT news sites and developer blogs/forums. With the economic climate having been given a real beating the last couple of years, IT shops are looking into ways to reduce costs (hopefully by avoiding redundancies). Enter cloud computing. It’s a great idea and, frankly, one that is probably going to change the face of IT in the coming years.

As a developer, I accept that it’s only a matter of time before I will need to learn about developing for a cloud architecture. So rather than wait until that moment arrives, I’ve been doing a little bit of reading here and there about Windows Azure, Microsoft’s cloud offering, which went live a few weeks ago. I thought I’d post some links here to help out (both as notes for myself and to aid other people who might land on this blog).

Channel 9

Channel 9 - Cloud Cover Episode 1

PDC09 Videos

Lap Around The Windows Azure Platform

Development Best Practices and Patterns for Using Microsoft SQL Azure Databases

Scaling out Web Applications with Microsoft SQL Azure Databases

Patterns for Building Scalable and Reliable Applications with Windows Azure

Windows Azure Tables and Queues Deep Dive

Microsoft SQL Azure Database: Under the Hood

Windows Azure Present and Future

Windows Azure Blob and Drive Deep Dive

Windows Azure Monitoring, Logging, and Management APIs

Developing Advanced Applications with Windows Azure

Enabling Single Sign-On to Windows Azure Applications

Building Hybrid Cloud Applications with Windows Azure and the Service Bus

Lessons Learned: Migrating Applications to the Windows Azure Platform

Automating the Application Lifecycle with Windows Azure

The Future of Database Development with SQL Azure

Lessons Learned: Building On-Premises and Cloud Applications with the Service Bus and Windows Azure

Lessons Learned: Building Scalable Applications with the Windows Azure Platform

Lessons Learned: Building Multi-Tenant Applications with the Windows Azure Platform

Introduction to Building Applications with Windows Azure

SQL Azure Database: Present and Future

Tips and Tricks for Using Visual Studio 2010 to Build Applications that Run on Windows Azure

The Business of Windows Azure: What you should know about Windows Azure Platform pricing and SLAs

Misc

Windows Azure Tools for Microsoft Visual Studio 1.1 (February 2010)

Windows Azure Platform Training Kit - December Update

Migrating an Existing ASP.NET App to run on Windows Azure

Seven things that may surprise you about the Windows Azure Platform

OakLeaf Systems

Sunday, February 14, 2010

Nice BDD Naming Convention For MSTest

If you like to use Behaviour Driven Development (like me :) then I assume you are using a naming convention for your tests, maybe like this:

- Given an empty shopping cart

- When I add an item

- Then shopping item count should be 1

I really like using the above naming convention due to its expressiveness – I can instantly see what a test is actually testing and the context under which that test is running. I’ve been doing some research into the best practice for using BDD naming for MSTest unit tests and found this blog post. The author has given a very neat example of how to use a base context class which your test classes can then inherit from. The idea is that you have the following class which sets out the basic structure of a test:

    using Microsoft.VisualStudio.TestTools.UnitTesting;

    namespace UnitTest
    {
        // Base class that gives every spec the same Context / BecauseOf / Cleanup shape.
        public abstract class ContextSpecification
        {
            public TestContext TestContext { get; set; }

            // Runs before each [TestMethod]: set up the context, then perform the action.
            [TestInitialize]
            public void TestInitialize()
            {
                Context();
                BecauseOf();
            }

            [TestCleanup]
            public void TestCleanup()
            {
                Cleanup();
            }

            protected virtual void Context() { }
            protected virtual void BecauseOf() { }
            protected virtual void Cleanup() { }
        }
    }

Those of you who have used frameworks such as MSpec will find the above very familiar (I’m a big fan of MSpec and use it for some of my projects at work, but none of my colleagues use it, hence my need for finding an MSTest way of BDD’ing my unit tests). You have the usual MSTest related code (TestContext, TestInitialize, etc) declared in this class, but notice the Context, BecauseOf and Cleanup methods. We’ll take a look at how those are used now. Check out the following unit test:

    using System;
    using Microsoft.VisualStudio.TestTools.UnitTesting;

    namespace UnitTest
    {
        public static class GetPersonNameSpecs
        {
            // Shared state for every spec about Person.GetFullName().
            public class GetPersonNameSpecsContext : ContextSpecification
            {
                protected Person sut;
                protected string actual = String.Empty;
            }

            [TestClass]
            public class when_name_has_not_been_given : GetPersonNameSpecsContext
            {
                protected override void Context()
                {
                    sut = new Person();
                }

                protected override void BecauseOf()
                {
                    actual = sut.GetFullName();
                }

                [TestMethod]
                public void returned_name_should_be_empty()
                {
                    Assert.AreEqual(String.Empty, actual);
                }
            }

            [TestClass]
            public class when_name_has_been_given : GetPersonNameSpecsContext
            {
                protected override void Context()
                {
                    sut = new Person("Jim");
                }

                protected override void BecauseOf()
                {
                    actual = sut.GetFullName();
                }

                [TestMethod]
                public void returned_name_should_be_Jim()
                {
                    Assert.AreEqual("Jim", actual);
                }
            }
        }
    }

As per the suggestion of the blog author, I have a static class named GetPersonNameSpecs and inside that class is the real meat and bones – two test classes which contain the unit tests. See how I make use of the Context and BecauseOf methods - with Context I can do my pre-test initialisation (creating class instances, or if I was using a mock framework, creating mock objects), then in the BecauseOf method I invoke the code to be tested. The final phase of the test, the assertion, is what’s declared with a [TestMethod] attribute. Since in ContextSpecification both Context and BecauseOf are called during TestInitialize, there is no need for us to apply any attributes to our version of those methods.
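The specs above rely on a Person class that isn’t shown in this post. Purely as an assumption on my part, here is a minimal sketch of what such a class might look like so that both specs pass. The field name and the decision to treat a missing name as an empty string are hypothetical, not taken from the original code:

```csharp
using System;

namespace UnitTest
{
    // Hypothetical class under test; the real Person class is not shown in the post.
    public class Person
    {
        private readonly string name;

        // No name given: fall back to an empty string.
        public Person() : this(String.Empty) { }

        public Person(string name)
        {
            // Assumption: a null name is treated the same as no name at all.
            this.name = name ?? String.Empty;
        }

        public string GetFullName()
        {
            return name;
        }
    }
}
```

With something like this in place, when_name_has_not_been_given sees an empty string and when_name_has_been_given sees "Jim".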

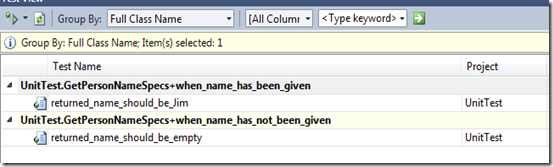

The advantage of this structure is it’s readability, take a look at how the above tests look like in my test viewer window:

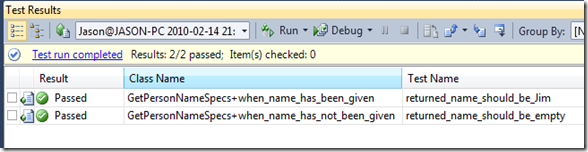

and in the results window:

Now, I don’t know about you, but I know that if I had to return to these tests in six months’ time, I would find them much easier to deal with.

Thursday, February 11, 2010

Fancy Having A Go at .NET 4.0 Exams?

- 70-511 TS: Microsoft .NET Framework 4, Windows Application Development

- 70-513 TS: Microsoft .NET Framework 4, Windows Communication Foundation Development

- 70-515 TS: Microsoft .NET Framework 4, Web Applications Development

- 70-516 TS: Microsoft .NET Framework 4, Accessing Data with ADO.NET

- 70-518 Pro: Designing and Developing Windows Applications Using Microsoft .NET 4

- 70-519 Pro: Designing and Developing Web Applications Using Microsoft .NET Framework 4

Tuesday, February 09, 2010

CLR via C#, 3rd Edition Out Now

I’m very happy to see that the newest edition of Jeffrey Richter's awesome book about the internals of the .NET CLR is now available. If you have read the 2nd edition then you will already know what a fantastic resource that book is for improving your understanding of how the CLR works. The new edition targets C# 4.0 and .NET 4.0, which are just around the corner. (In fact, the RC for VS2010 is out now for MSDN customers, and will be available to the public on Feb 10. Check out this Channel9 video for more details.)

Tuesday, January 26, 2010

“The Dark” Comic Signing Event

A very good friend of mine, Chris Lynch, will be at Ace Comics in London on March 20th to sign copies of “The Dark”, which is an exciting new comic book series written by Chris with amazing artwork provided by Rick Lundeen. Festivities start at 12pm. This is your chance to get a signed copy of a great series in the making – a chance not to be missed, so c’mon! :)

Monday, January 25, 2010

Improve Readability of !GCRoot Command

One of the most powerful commands available in SOS.dll is !gcroot. It can help you figure out why an object is stuck in memory and which object(s) are referencing it and keeping it alive. The one problem with !gcroot is that its output can be very difficult to navigate. I remember when I first used this command to try and debug an issue for an application at work. At first I was excited at getting the chance to use it on a “real world” issue. However, that excitement turned to bewilderment when I saw the output of !gcroot, and I just sat there for a few moments whilst I tried to work out what exactly the output was trying to tell me.

Well that was then, and since those days I’ve learned a little bit more about how to read !gcroot’s output. Studying blogs such as this one has helped a lot. Today I was reading John Robbins' blog and I saw a link to this tool, which is an object graph visualizer which runs in VS 2010. The author has created a video to demonstrate this tool and I must say, it looks very interesting! I suggest you check it out.

Sunday, January 10, 2010

Does Learning Assembly Language Matter Anymore?

When I started out to teach myself how to program I was always a bit bewildered by assembly language. I often looked at example code from online forums and stared at strange syntax such as this:

    mov EAX, [EBP+8]
    lea EDX, [EAX]

I thought “How can you possibly write software using this stuff?”. Obviously I was being naive, since compiling a Visual Basic or C++ Builder application (my two favorite development tools at the time – others included PowerBASIC) ultimately generated exactly this “stuff”, which the CPU could make use of. As I became more proficient as a programmer, I noticed that I was looking more and more at assembly language code during my debugging sessions. Very often in the C++ Builder IDE I would be watching the values of the EAX and ECX registers, so that I could see the return value of a function or the current loop index value. Little did I know that I was starting to appreciate the power of this language and what could be achieved with it.

Back in the early 2000s I downloaded MASM32, a package built around Microsoft’s assembler, and RadASM, a great IDE for writing assembler code. With those tools I was able to write very simple assembler stuff, mainly DLL files that I could use with my Visual Basic 6 applications. It was so cool to see my VB6 code call an assembler routine, passing it a string that was displayed in a message box – a message box created in pure assembly code!! (I guess you had to be there :) What I was learning by doing this was how different calling conventions worked, and how to build a DLL file using the assembly source and static library references. To pass a parameter from my VB code, I had to learn how to navigate a stack frame by using the ESP and EBP registers to get to the parameter’s address and grab its content in order for MessageBox to use it. I learned that:

mov EAX, [EBP+8]

actually meant “move the contents of memory address EBP+8 into the register EAX” – in other words, dereferencing a memory address. Having done a fair amount of C/C++ programming and using pointers, I was getting under-the-hood experience of how pointers worked at the assembler level. This actually helped me understand pointers better.
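To make that concrete, here is a rough sketch of the same idea in C#. It is only a simulation: the byte array standing in for memory, the value chosen for EBP, and all of the names are hypothetical, and it assumes the usual 32-bit frame layout where [EBP] holds the saved EBP, [EBP+4] the return address and [EBP+8] the first argument.

```csharp
using System;

public static class StackFrameSketch
{
    // Simulates "mov EAX, [EBP+8]": read the 32-bit value stored at the given address.
    public static int LoadDword(byte[] memory, int address)
    {
        return BitConverter.ToInt32(memory, address);
    }

    public static int Demo()
    {
        byte[] memory = new byte[64]; // pretend this is the stack (hypothetical size)
        int ebp = 16;                 // hypothetical frame pointer value

        // The caller "pushed" the argument, so it sits 8 bytes above EBP.
        BitConverter.GetBytes(0x1234).CopyTo(memory, ebp + 8);

        // EBP+8 is just an address; the square brackets mean "fetch what is stored there".
        int eax = LoadDword(memory, ebp + 8);
        return eax;
    }
}
```

The point is that EBP+8 on its own is only a number; it is the brackets that turn it into a memory read, which is exactly what dereferencing a pointer does in C/C++.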

OK, so what am I getting at here?

In this day and age of managed runtimes and virtual machines, .NET languages, PHP, Perl, PowerShell, etc, some developers might be asking themselves “what’s the point of learning assembler? It’s not like I’m ever going to use it!”. That might very well be the case, but bear in mind this thought – without good assembly language developers, there would be no operating systems. Let me explain. Take Windows and Linux for example: the vast majority of the codebase for these OSs is written in C/C++, but I would place a very large bet that a small percentage of the code is written in assembler. Assembler code must exist somewhere in the OS, since talking directly to hardware would not otherwise be possible. Without those hardcore assembler developers, who’s going to look after this code? If there’s no new talent on the horizon, that would be a real loss to the OS developers, who rely on assembler programmers to churn out the low-level communications code which is vital for the OS’s existence.

Take computer games. There’s bound to be a lot of assembler code in the source for computer games – for example, code to optimize the graphics algorithms so that the frame rate can be really high. A lot of games are written in C/C++, but hand-tuned assembler is still hard to beat for pure speed. Also, you sometimes can’t as easily take advantage of CPU-specific instructions from a higher level language, whereas coding in assembler gives you direct access to those instructions.

One of the most important areas where assembly language experience counts a lot is crash dump analysis. In my early years I would often see a BSOD and wonder “how can you tell what’s broken just by looking at this crap?!… What’s a bugcheck and what’s this stack frame rubbish?” Now things are different. I’ve been reading blogs such as Crash Dump Analysis and the NT Debugging blog to help me understand how to analyze and interpret the information in dump files. I’ve also taught myself how to use WinDbg, which is a free debugger for Windows. I’ve spent a lot of time recently working with SharePoint 2007, and believe it or not, having WinDbg and assembly language experience has come in very handy! How? Well, SharePoint 2007 isn’t the most, how shall we say, “consistent” platform to use. You might get an exception one day that has the following message:

“Something's happened, it’s broken the site, so… there you go”.

(OK, not what you actually get, but in some cases the exception message is totally useless).

When I see an exception message which is useless, I attach WinDbg to the w3wp.exe process running the “broken” SharePoint site so I can see the real exception that caused the problem, and sometimes I might have to step into the running code at assembler level to see what’s going on. I don’t have to do this often, but having this in my arsenal has saved me a couple of times from punching the wall in frustration over why I’m getting an error.

I strongly believe that learning assembler makes you a better developer, but that’s just me. Check out the following Stack Overflow questions on the matter, see what you think:

Is learning Assembly Language worth the effort?

Why do we teach assembly language programming?

Why do you program in assembly?

Should I learn Assembly programming?

Is there a need to use assembly these days?

What are the practical advantages of learning Assembly?

The Death of Personal Computer World Magazine

One thing that stood out most about PCW was the diverse range of topics included in the back pages, such as a monthly column on mathematics. Now, I don’t recall off the top of my head what exactly was discussed in that column, but I can’t think of another magazine at the time (or since) that offered such a section. I thought it was pretty cool, regardless of the fact that I understood very little of it!

Over time I bought the magazine less and less, to the point where I stopped buying it entirely. This wasn’t a conscious decision; it just kind of happened organically. A couple of years ago I was browsing through PCW in my local Tesco and noticed how the magazine had suffered the same decline as many other computer magazines – not as much content (I think it barely reached 100 pages). It still had many reviews and such, but it just didn’t feel quite as “heavy” as it did in the 90s. It felt like there was something missing. Even so, it came as a bit of a surprise when, in the summer of 2009, I noticed that my local Tesco didn’t seem to be stocking PCW anymore. A recent search on Google led me to this page. Here Gordon Laing gives his postmortem on PCW and why it died off.

This is a real shame. Yes, I wasn't buying the magazine each month, but still, I felt like an old friend had vanished forever (over the top I know, but you know what I mean :) Other mags such as PC Pro don’t feel like they give the same value as PCW did. PCW does still exist on the web, but its publishing days are well and truly over :(.

Saturday, January 09, 2010

Nice Application To Handle Requirements Management

One thing that’s been tricky at our company has been requirements management. There’s no problem gathering requirements and creating a document that describes them; the trouble comes when we need to create TFS (Team Foundation Server) work items based on those requirements. There’s always a chance of a requirement being lost (especially true for very large documents) and not getting a TFS work item created for it – a big problem indeed. Certainly there have been cases when a missed requirement was not discovered until near the end of a project, a rather large ‘ouch’ moment!

In the past, a colleague of mine did tinker with a prototype Word addin that allows a user to highlight sections of a Word 2007 document and mark them as requirements. He never finished it, mainly due to time, plus what we needed from a requirements management solution called for something more than just a simple addin. However, I’ve just found this very interesting product – ‘TeamSpec for TFS Requirements Management’. I’m keen to have a look at this since it could be just the ticket for us. Basically this application allows you to integrate Word 2007 documents with TFS, giving you the ability to create work items and link them into the document. The advantage is that you are linking directly between the document and the work items, which means, for example, when viewing the document you get a live update of that work item’s state (if the ‘State’ field has been included in the document).

Not only that, but it also saves time since currently we must write the requirements document, then someone has to go through the document again, picking out each requirement, and creating work items for them. Very tedious; this was crying out for a better solution! There’s a nice explorer window that appears in Word which shows you the work items linked into the document:

On the right is the explorer window, which is showing 4 work items. Each work item is described in the document in the bullet points. You can pick and choose which work item fields to display in the document. Each field is bound to the work item in TFS, which means that any updates to a work item in TFS are synchronized in the document. There are a lot of cool features in this product, so check out the site and see for yourself.